Discovery at Carlson

Smarts, Artificial and Human

How much should you trust Alexa? Will you let it tell you what to do next? In a sense, these are questions Alok Gupta believes we all should be asking.

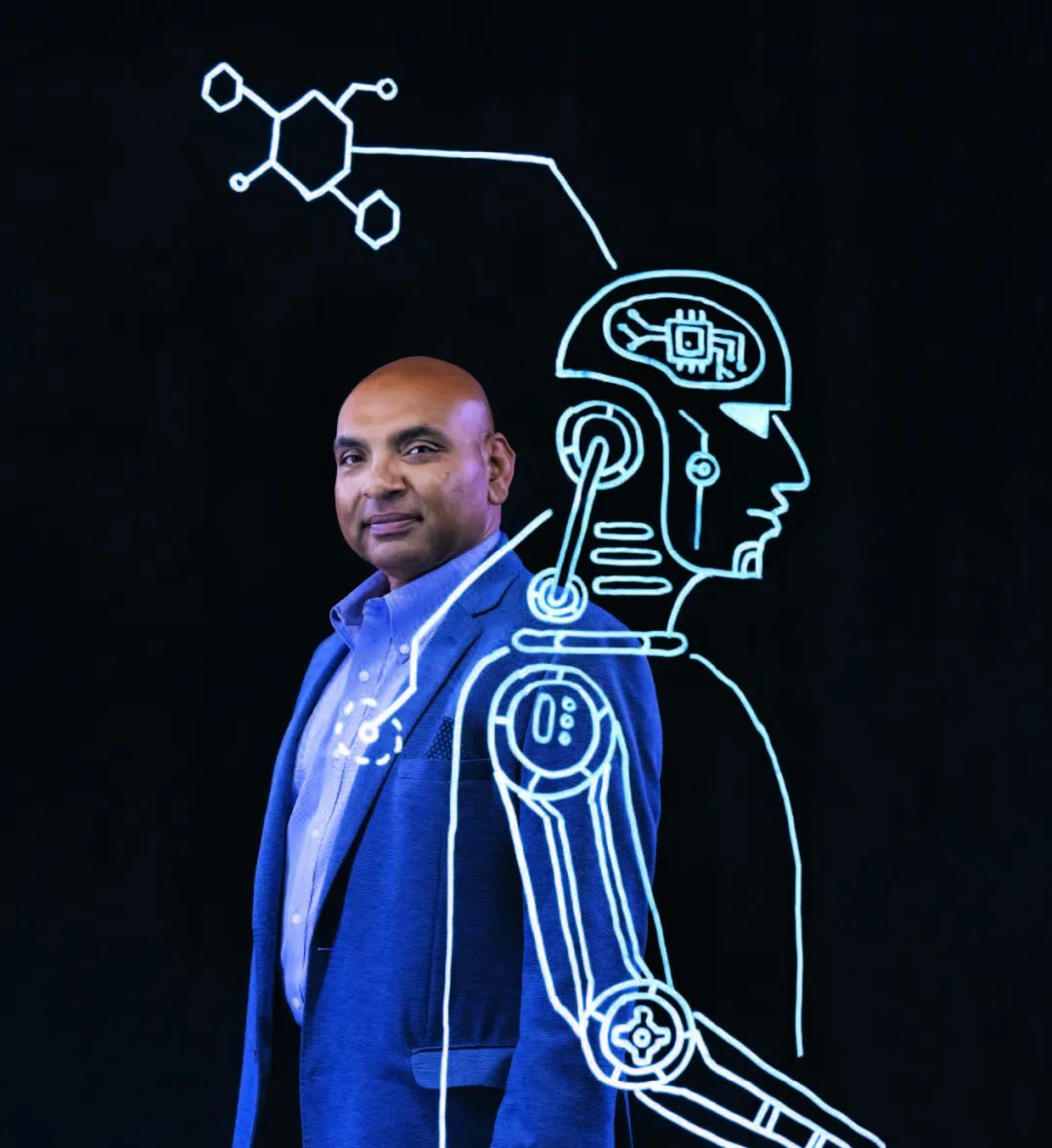

In two recent research papers, Gupta, the Curtis L. Carlson Chair in Information Management and the Carlson School’s Senior Associate Dean of Faculty and Research, tested how humans and machines work together. His findings regarding artificial intelligence (AI) demonstrate why humans shouldn’t rely too much on machines for making decisions.

In a paper published in the September issue of MIS Quarterly, Gupta provocatively asks whether “humans-in-the-loop will become Borgs.” The term “humans-in-the-loop” refers to hybrid work environments where humans and AI “machines” collaborate. A second paper, which will appear in a future issue of Information Systems Research, demonstrates that humans have cognitive limitations about their metaknowledge, that is, our knowledge or assessment of what we don’t know. This limitation makes it difficult for us to delegate knowledge work to machines, even when we should. Gupta co-authored both papers with Andreas Fügener, Jörn Grahl, and Wolfgang Ketter, all members of the University of Cologne’s Faculty of Management, Economics, and Social Sciences.

AI is no longer sci-fi. More and more businesses have incorporated AI and machine learning into their processes to make better decisions relating to contracts, supply chains, and consumer behavior. Scholars have been studying humans-in-the-loop work environments for some time, and Gupta himself has conducted research in this area. But he notes that studies have tended to focus “on short-term performance and not what happens in longer-term decision-making processes.”

As these papers show, those longer-term effects aren’t all positive. In fact, “simulation results based on our experimental data suggest that groups of humans interacting with AI are far less effective as compared to human groups without AI assistance,” Gupta says.

Reliance on AI-assisted decision-making can be particularly deficient when it comes to developing new solutions that generate genuine innovation. “Humans have an uncanny ability to connect the seemingly unconnected dots to come up with solutions that are not generated by linear thinking,” Gupta notes. “Most innovations occur when humans face challenges in their day-to-day environments. The more humans are removed from any environment, the more unlikely it will become to innovate in that particular space or to make accidental discoveries.”

In other words, by relying too much on AI for decision-making, we are in danger of surrendering our greatest strength: the capacity to see things in our own unique ways. We also risk not being able to leverage the insights that other people can provide.

On the other hand, the co-authors’ newest paper notes that being unaware of what we don’t know fundamentally limits how well we can collaborate with AI, and therefore how useful AI can be. There are times when humans need to, in a sense, “defer” to the machines. The authors’ research shows that humans and AI improve their collaborative performance when AI delegates certain decision-making tasks to humans, rather than the other way around.
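One way to picture AI-to-human delegation is a toy routing rule in which the AI keeps the tasks it is confident about and hands the rest to a person. The sketch below is only an illustration under stated assumptions: the `Task` fields, the confidence threshold, and the five made-up tasks are hypothetical and are not the authors’ experimental design or data.

```python
from dataclasses import dataclass


@dataclass
class Task:
    ai_confidence: float  # AI's own estimate (0..1) that its answer is right
    ai_correct: bool      # whether the AI's answer would be right
    human_correct: bool   # whether the human's answer would be right


def accuracy_with_ai_delegation(tasks, threshold):
    """AI answers tasks at or above the threshold and delegates the rest to the human."""
    correct = 0
    for task in tasks:
        if task.ai_confidence >= threshold:
            correct += task.ai_correct      # AI keeps the task
        else:
            correct += task.human_correct   # AI delegates to the human
    return correct / len(tasks)


# Invented toy data, chosen only to make the routing visible.
tasks = [
    Task(0.95, True, False),
    Task(0.90, True, True),
    Task(0.55, False, True),
    Task(0.40, False, True),
    Task(0.85, True, False),
]

ai_alone = accuracy_with_ai_delegation(tasks, threshold=0.0)     # AI keeps every task
human_alone = accuracy_with_ai_delegation(tasks, threshold=1.1)  # every task goes to the human
hybrid = accuracy_with_ai_delegation(tasks, threshold=0.7)       # AI delegates low-confidence tasks

print(ai_alone, human_alone, hybrid)
```

In this contrived example the hybrid beats either party alone only because the AI’s confidence happens to track where it is actually right. The rule depends on the AI knowing what it does not know, the same metaknowledge the article says humans find hard to assess in themselves.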

All this certainly doesn’t mean AI isn’t a highly useful tool. However, the rush to adopt AI-based technologies to replace or augment human work has its perils. An approach to human-AI interaction that Gupta and his co-authors recommend is what they call “personalized AI advice.” This means designing an AI system that can observe an individual’s decision-making and assess where its “advice” falls short, based on that individual’s decision patterns. “It can then set an appropriate level of advice that optimizes the joint performance of the human plus AI while retaining human individuality and uniqueness,” Gupta says.

“The implication of our work,” he adds, “is that we need to ‘teach’ future knowledge workers not just about new competencies but also about how to assess their own limitations in those competencies so that they can effectively work with AI-based machines.”

As Gupta notes, “there has been a lot of talk about AI taking over jobs. Many researchers feel that this estimate is overblown.” Human creativity and insight will always be necessary. There’s a great deal AI can and will do to help us make better decisions. But as Gupta’s work suggests, we humans (and not Alexa) should have the final say, using our distinctive capacity for judgment.

Smarts, Artificial and Human

Do you trust the AI assistant Alexa? Would you let it decide your next step for you?

Alok Gupta believes everyone should be thinking about these questions.

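Alok Gupta is the Curtis L. Carlson Chair in Information Management and Senior Associate Dean of Faculty and Research at the Carlson School. His two most recent papers study human-machine collaboration, and their findings indicate that humans should not rely too heavily on machines when making decisions.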

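In a paper published in the September issue of MIS Quarterly, Gupta asks, somewhat provocatively, “Will humans-in-the-loop become machines?” The term “humans-in-the-loop” refers to hybrid work environments in which humans and AI “machines” collaborate. His second paper, soon to be published in Information Systems Research, shows that humans face cognitive limits on metaknowledge, that is, on recognizing and assessing what they do not know. Because of this limitation, we find it hard to delegate knowledge work to machines, even when such delegation is needed. The co-authors of both papers are Andreas Fügener, Jörn Grahl, and Wolfgang Ketter of the University of Cologne’s Faculty of Management, Economics, and Social Sciences.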

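AI is no longer science fiction. More and more businesses are applying AI and machine learning to support decisions about contracts, supply chains, and consumer behavior. Scholars began studying “humans-in-the-loop” work environments long ago, and Gupta is one of them. But he notes that research has tended to focus on “short-term performance rather than the long-term effects on decision-making processes.”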

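As these two papers show, the long-term effects are not all positive. In fact, “the results of the experimental simulations show that groups of humans assisted by AI are far less effective than groups of humans without AI assistance,” Gupta says.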

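Solutions that achieve genuine innovation often come from places where AI assistance is largely absent. Gupta notes: “Humans have an uncanny ability to connect seemingly unrelated things and propose solutions that come from nonlinear thinking. Most innovation stems from the challenges humans encounter in their everyday environments. If humans move away from an environment, innovation or accidental discovery becomes unlikely there.”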

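In other words, relying too heavily on AI for decisions means giving up our greatest strength, the distinctive way humans see things, and giving up the insights other people can offer.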

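The co-authors’ newest paper also notes that not knowing what we do not know limits AI’s usefulness and fundamentally limits the extent of human-machine collaboration. At times, humans need to “defer” to the machine. The research shows that when AI delegates decision-making tasks to humans, human-machine collaborative performance actually improves.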

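Of course, these findings do not deny AI’s usefulness as a tool. But rushing to apply AI to replace or augment human work can have negative consequences. Gupta and his co-authors recommend one approach to human-machine collaboration, which they call “personalized AI advice.” That is, the AI system observes an individual’s decisions and then, based on that individual’s decision patterns, assesses whether the AI has given sufficient “advice.” “The AI then sets an appropriate level of advice, improving the overall performance of the human-machine collaboration while preserving human individuality and uniqueness.”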

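Gupta adds, “Our research finds that we need to ‘teach’ future knowledge workers. We must teach them not only new skills but also how to assess their own limitations in those skills, so that they can collaborate more effectively with AI-based machines.”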

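“Claims that AI will replace humans have been loud and persistent, but many researchers consider that estimate exaggerated.” Human creativity and insight will always be necessary. AI can do, and will do, a great deal to help humans make decisions. But as Gupta’s research shows, the final say should belong to humans, with their distinctive capacity for judgment, and not to Alexa.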

ALOK GUPTA

Senior Associate Dean, Faculty and Research